The Clean Architecture in Python

@brandon_rhodes

PyCon Ireland

2013

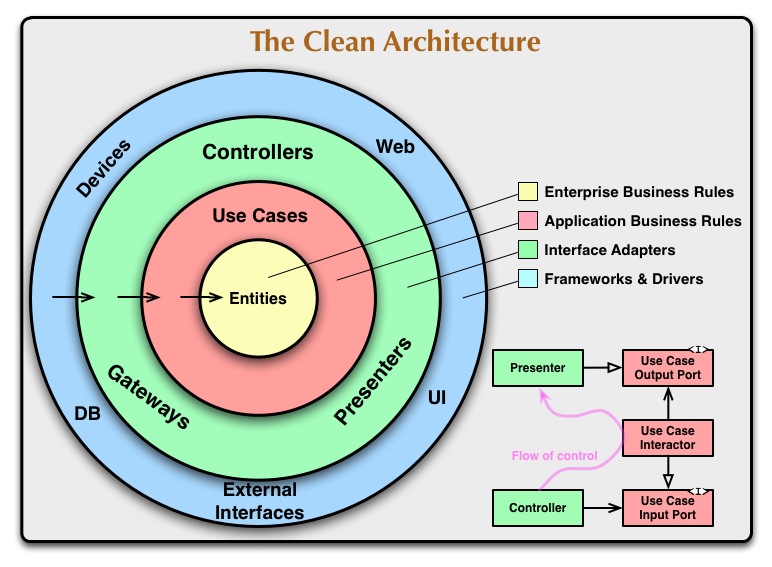

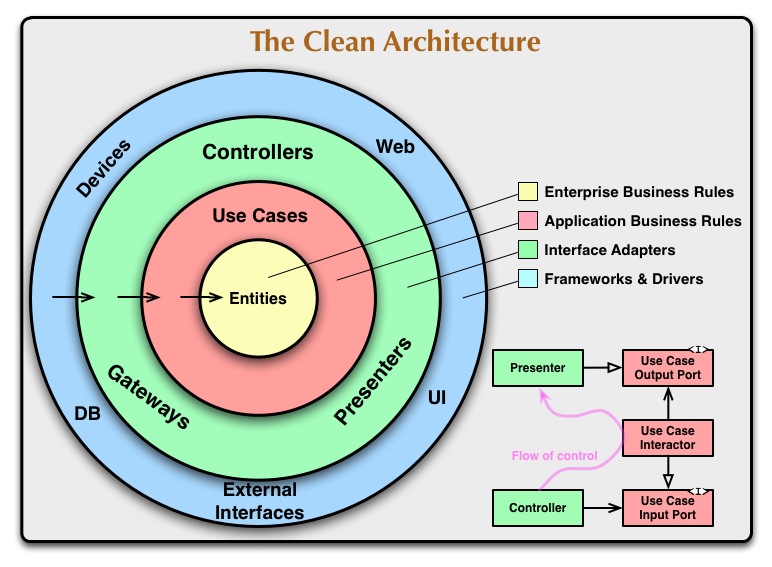

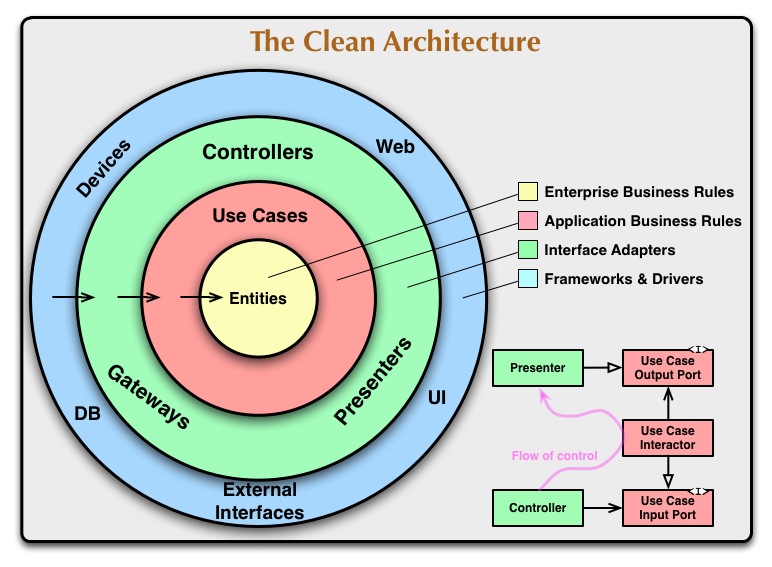

The inspiration

Uncle Bob Martin’s

Clean Architecture

http://blog.8thlight.com/uncle-bob/2011/11/22/Clean-Architecture.html

http://blog.8thlight.com/uncle-bob/2012/08/13/the-clean-architecture.html

The pith

We programmers spontaneously

use subroutines backwards

subroutine

- Python function

- Python method

For how long have

programmers tended to use

subroutines backwards?

60 years

1952

ACM national meeting

Pittsburgh, Pennsylvania

THE USE OF SUB-ROUTINES IN PROGRAMMES

D. J. Wheeler

Cambridge & Illinois Universities

context

Typical computer:

1,000 words of RAM,

1,000 operations per second,

required a dozen people

How complex could programming

even be with only 1k of memory?

Wheeler (1952)

“the preparation of a

library sub-routine requires

a considerable amount of work.

“However, even after it has

been coded and tested there still

remains the considerable task of writing

a description

so that people not acquainted

with the interior coding can

nevertheless use it easily.

“This last task may be the most difficult.”

What does Wheeler advertise

subroutines as being good at?

Hiding complexity

“All complexities should —

if possible — be buried

out of sight.”

Our mistake?

Our mistake

- Bury I/O

- Fail to bury anything else

```python
import requests  # Listing 1
from urllib.parse import urlencode

def find_definition(word):
    q = 'define ' + word
    url = 'http://api.duckduckgo.com/?'
    url += urlencode({'q': q, 'format': 'json'})
    response = requests.get(url)
    data = response.json()
    definition = data[u'Definition']
    if definition == u'':
        raise ValueError('that is not a word')
    return definition
```


```python
import requests
from urllib.parse import urlencode

def find_definition(word):  # Listing 2
    q = 'define ' + word
    url = 'http://api.duckduckgo.com/?'
    url += urlencode({'q': q, 'format': 'json'})
    data = call_json_api(url)
    definition = data[u'Definition']
    if definition == u'':
        raise ValueError('that is not a word')
    return definition

def call_json_api(url):
    response = requests.get(url)
    data = response.json()
    return data
```


- Reusable
- Isolates API knowledge
- A single import of requests

True story: that

code was initially

```python
import json
...
response = requests.get(url)
text = response.text
data = json.loads(text)
```


The fatal mistake

Stopping once the I/O has

disappeared into a subroutine

We privilege I/O by

granting it a subroutine,

leaving our logic stranded

and tightly coupled

Q:

We have hidden I/O,

but have we really

decoupled it?

Pace Wheeler,

hiding is not enough


```python
from urllib.parse import urlencode

def find_definition(word):  # Listing 3
    url = build_url(word)
    data = call_json_api(url)  # the I/O procedure from Listing 2
    return pluck_definition(data)

def build_url(word):
    q = 'define ' + word
    url = 'http://api.duckduckgo.com/?'
    url += urlencode({'q': q, 'format': 'json'})
    return url

def pluck_definition(data):
    definition = data[u'Definition']
    if definition == u'':
        raise ValueError('that is not a word')
    return definition
```

Claim

Listing 3 is an architectural success

while the others were failures

Listing 3 shows in miniature what

the Clean Architecture does

for entire applications

```python
def find_definition(word):  # Listing 3
    url = build_url(word)
    data = call_json_api(url)
    return pluck_definition(data)
```

The coupling between

logic and I/O is isolated

to a small procedure


Eminently readable

because it remains at a

single level of abstraction


These names document

what each section

of code is doing

XP

```python
# Build the URL
q = 'define ' + word
url = 'http://api.duckduckgo.com/?'
url += urlencode({'q': q, 'format': 'json'})
```

Replace comments with names:

```python
def build_url(word):
    q = 'define ' + word
    url = 'http://api.duckduckgo.com/?'
    url += urlencode({'q': q, 'format': 'json'})
    return url
```

Our Architecture

```
Listing 1    procedure

Listing 2    procedure
               ↘ i/o procedure

Listing 3    procedure
               ↘ pure function
               ↘ i/o procedure
               ↘ pure function
```

Testing

How would we have

tested listing 1 or 2?

Goal

Test the code without

calling Duck Duck Go

Two techniques

- Dependency injection

- With mock.patch()

Dependency injection

2004 — Martin Fowler

Make the I/O library

itself a parameter

```python
import requests
from urllib.parse import urlencode

def find_definition(word, requests=requests):
    q = 'define ' + word
    url = 'http://api.duckduckgo.com/?'
    url += urlencode({'q': q, 'format': 'json'})
    response = requests.get(url)
    data = response.json()
    definition = data[u'Definition']
    if definition == u'':
        raise ValueError('that is not a word')
    return definition
```

```python
class FakeRequestsLibrary(object):
    def get(self, url):
        self.url = url
        return self

    def json(self):
        return self.data

def test_find_definition():
    fake = FakeRequestsLibrary()
    fake.data = {u'Definition': 'abc'}
    definition = find_definition(
        'testword', requests=fake)
    assert definition == 'abc'
    assert fake.url == (
        'http://api.duckduckgo.com/'
        '?q=define+testword&format=json')
```

Problems

- Your mock is not the real library

- This might look simple for one service

But a procedure that also

needs a database and filesystem

will need lots of injection

A high-level function

needs every single service

required by its subroutines

```
big_procedure(web=web, db=db, fs=fs)
  ↘ smaller_procedure(web=web, db=db)
      ↘ little_helper(web=web)
```

A dynamic language like

Python has ways around

dependency injection

```python
from unittest.mock import patch

def test_find_definition():
    fake = FakeRequestsLibrary()
    fake.data = {u'Definition': u'abc'}
    with patch('requests.get', fake.get):
        definition = find_definition('testword')
    assert definition == 'abc'
    assert fake.url == (
        'http://api.duckduckgo.com/'
        '?q=define+testword&format=json')
```

DI or patch()

Either way,

awkward

sad

How does testing improve

when we factor out our

logic as in Listing 3?


By definition, pure functions

can be tested using only data

```python
def test_build_url():
    assert build_url('word') == (
        'http://api.duckduckgo.com/'
        '?q=define+word&format=json')

def test_build_url_with_punctuation():
    assert build_url('what?!') == (
        'http://api.duckduckgo.com/'
        '?q=define+what%3F%21&format=json')

def test_build_url_with_hyphen():
    assert build_url('hyphen-ate') == (
        'http://api.duckduckgo.com/'
        '?q=define+hyphen-ate&format=json')
```

- No special set-up

- No special preparation

- Test calls are symmetric with normal calls

```python
import pytest

def test_pluck_definition():
    assert pluck_definition(
        {u'Definition': u'something'}
    ) == 'something'

def test_pluck_definition_missing():
    with pytest.raises(ValueError):
        pluck_definition(
            {u'Definition': u''}
        )
```

A symptom of coupling

```python
call_test(good_url, good_data)
call_test(bad_url1, whatever)
call_test(bad_url2, whatever)
call_test(bad_url3, whatever)
call_test(good_url, bad_data1)
call_test(good_url, bad_data2)
call_test(good_url, bad_data3)
```
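With the logic split out as in Listing 3, each kind of bad input can be checked against the one function that cares about it, with no `whatever` placeholders. A minimal sketch: it repeats Listing 3's two pure functions (in Python 3) so the block runs on its own, and the sample words and payloads are made up for illustration.

```python
from urllib.parse import urlencode

def build_url(word):
    q = 'define ' + word
    url = 'http://api.duckduckgo.com/?'
    url += urlencode({'q': q, 'format': 'json'})
    return url

def pluck_definition(data):
    definition = data[u'Definition']
    if definition == u'':
        raise ValueError('that is not a word')
    return definition

# URL cases need only a word; no response data is involved.
URL_CASES = {
    'word': 'define+word',
    'what?!': 'define+what%3F%21',
    'two words': 'define+two+words',
}

# Data cases need only a payload; no URL is involved.
BAD_PAYLOADS = [
    {u'Definition': u''},
    {u'Definition': u'', u'Heading': u'Xyzzy'},
]

def run_checks():
    for word, expected_q in URL_CASES.items():
        assert build_url(word) == (
            'http://api.duckduckgo.com/?q=' + expected_q + '&format=json')
    for payload in BAD_PAYLOADS:
        try:
            pluck_definition(payload)
        except ValueError:
            continue
        raise AssertionError('no ValueError for %r' % (payload,))
    assert pluck_definition({u'Definition': u'a word'}) == u'a word'

run_checks()
```

Adding another malformed URL case means adding one dictionary entry, without inventing response data to go with it.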

So let’s talk architecture

“In general, the further in you go,

the higher level the software becomes.

The outer circles are mechanisms.

The inner circles are policies.”

“The important thing is

that isolated, simple data structures

are passed across the boundaries.”

“When any of the external parts

of the system become obsolete, like

the database, or the web framework,

you can replace those obsolete elements

with a minimum of fuss.”
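Listing 3 shows that promise in miniature: only `call_json_api()` knows which HTTP library is in use, so retiring the third-party requests package would touch one function. Here is a sketch of a standard-library replacement, assuming Python 3's `urllib.request`; the name `call_json_api_stdlib` is hypothetical, and the demo reads a `file://` URL only so that it runs without a network.

```python
import json
import tempfile
import urllib.request
from pathlib import Path

def call_json_api_stdlib(url):
    # Mechanism: the only place that knows how the bytes are fetched.
    with urllib.request.urlopen(url) as response:
        return json.load(response)

def pluck_definition(data):
    # Policy: unchanged from Listing 3.
    definition = data[u'Definition']
    if definition == u'':
        raise ValueError('that is not a word')
    return definition

def demo():
    # Serve canned JSON from a temporary file instead of Duck Duck Go.
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / 'canned.json'
        path.write_text(json.dumps({'Definition': 'a canned answer'}))
        data = call_json_api_stdlib(path.as_uri())
    return pluck_definition(data)

print(demo())  # a canned answer
```

The plain dictionary returned by the I/O procedure is the "simple data structure" crossing the boundary; `pluck_definition()` cannot tell which mechanism produced it.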


How do you test the

top-level “procedural glue”?

Gary Bernhardt

PyCon talks:

- Units Need Testing Too

- Fast Test, Slow Test

- Boundaries

“Imperative shell”

that wraps and uses your

“functional core”

Functional core — Many fast unit tests

Imperative shell — Few integration tests

This should only require

one or two integration tests!

```python
def find_definition(word):  # Listing 3
    url = build_url(word)
    data = call_json_api(url)
    return pluck_definition(data)
```
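The same split works beyond a single function. In this made-up example (not from the talk), the functional core parses and totals amounts and is checked with plain data, while the imperative shell `main()` does all the file I/O and gets one integration test against temporary files.

```python
import tempfile
from pathlib import Path

# Functional core: pure functions, checked with plain data.
def parse_amounts(text):
    """Turn lines like 'coffee 3.50' into a list of floats."""
    amounts = []
    for line in text.splitlines():
        if line.strip():
            _, amount = line.rsplit(None, 1)
            amounts.append(float(amount))
    return amounts

def format_total(amounts):
    return 'total: %.2f\n' % sum(amounts)

# Imperative shell: the only code that touches the filesystem.
def main(in_path, out_path):
    text = Path(in_path).read_text()
    Path(out_path).write_text(format_total(parse_amounts(text)))

# Many fast unit tests for the core...
def test_core():
    assert parse_amounts('coffee 3.50\n\ntea 2\n') == [3.5, 2.0]
    assert format_total([3.5, 2.0]) == 'total: 5.50\n'
    assert format_total([]) == 'total: 0.00\n'

# ...and one integration test for the shell.
def test_shell():
    with tempfile.TemporaryDirectory() as tmp:
        src, dst = Path(tmp) / 'in.txt', Path(tmp) / 'out.txt'
        src.write_text('coffee 3.50\ntea 2\n')
        main(src, dst)
        assert dst.read_text() == 'total: 5.50\n'

test_core()
test_shell()
```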

Functional programming

LISP, Haskell, Clojure, F#

Functional languages naturally

lead you to process data structures

while avoiding side-effect I/O

```python
# I/O as a side effect
def uppercase_words(wordlist):
    for word in wordlist:
        word = word.upper()
        print(word)

# Logic with zero side-effects
def process_words(wordlist):
    return [word.upper() for word in wordlist]

# I/O goes outside of logic
def procedural_glue(wordlist):
    upperlist = process_words(wordlist)
    for word in upperlist:
        print(word)
```

Procedural code:

Output as-you-go

Functional code:

Each stage produces a data structure

that gets output at the end

Engineering students

will tell you that Statics class

is easier than Dynamics class

Why functional?

Because of immutability?

My guess

The biggest advantage of data in

a functional programming style

is not its immutability

It is simply the fact

that it is data!

Fred Brooks

1975

“The bearing of a child

takes nine months, no matter

how many women are assigned.”

“Show me your flowchart and

conceal your tables, and I shall

continue to be mystified.

Show me your tables, and I won’t

usually need your flowchart;

it’ll be obvious.”

1986

McIlroy vs. Knuth

Knuth — Literate programming

“Given a text file and an integer k,

print the k most common words in the file

(and the number of their occurrences)

in decreasing frequency.”

Knuth: 10 pages of Pascal

McIlroy:

“Knuth’s solution is to tally in an

associative data structure each word

as it is read from the file.

“The data structure is a trie, with 26-way

(for technical reasons actually 27-way)

fan-out at each letter. To avoid wasting

space all the (sparse) 26-element arrays

are cleverly interleaved in one common

arena, with hashing used to assign homes”

McIlroy: 6-line shell script

```shell
tr -cs A-Za-z '\n' |
tr A-Z a-z |
sort |
uniq -c |
sort -rn |
sed ${1}q
```

“Every one was written first

for a particular need, but untangled

from the specific application.”

Traditional lesson:

Use small simple tools that

can easily be linked together

Python!

- Lists

- Dictionaries

- Comprehensions

- Generator functions

- Generator expressions

- For loops

But I want to draw

a different lesson:

The shell script is simpler

because it operates through the

stepwise transformation of data

```shell
tr -cs A-Za-z '\n' |
tr A-Z a-z |
sort |
uniq -c |
sort -rn |
sed ${1}q
```

This approach continually surfaces

intermediate results using a magnificent

data structure: plain-text lines
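Python's own tools support the same stepwise style. Here is a rough analogue of McIlroy's pipeline, sketched with the standard library rather than taken from the talk. `collections.Counter` stands in for `sort | uniq -c | sort -rn`, and every intermediate result is an ordinary list or mapping that can be printed and inspected:

```python
import re
from collections import Counter

def top_words(text, k):
    words = re.findall(r'[A-Za-z]+', text)   # tr -cs A-Za-z '\n'
    lowered = [w.lower() for w in words]      # tr A-Z a-z
    counts = Counter(lowered)                 # sort | uniq -c
    return counts.most_common(k)              # sort -rn | sed ${1}q

print(top_words('The cat and the hat. THE end, cat!', 2))
# [('the', 3), ('cat', 2)]
```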


So why immutability?

Gary Bernhardt:

distributed computing

See Jonathan Harrington’s

“The Data Storm” talk from

the previous session here

at PyCon Ireland

Data and transforms are easier

to understand and maintain

than coupled procedures

If that is the case,

then Python has been evolving

in exactly the right direction

```python
for i in range(len(items)):
    items[i] = transform(items[i])
```

Python 2.0 (October 2000)

↓

```python
items = [transform(item) for item in items]
```

```python
items = list(load_items())
items.sort()
for item in items:
    ...
```

Python 2.4 (November 2004)

↓

```python
for item in sorted(load_items()):
    ...
```

Remember: Python has several kinds

of callable beyond plain sub-routines!

- generators

- context managers

generators

Imagine a compound iteration that

occurs repeatedly through your code

```python
for college in university.colleges:
    for school in college.schools:
        for department in school.departments:
            ...
```

```python
def all_departments(university):
    for college in university.colleges:
        for school in college.schools:
            for department in school.departments:
                yield department

def process1():
    ...
    for department in all_departments(university):
        ...

def process2():
    ...
    names = [department.name for department
             in all_departments(university)]
    ...
```
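To make the generator runnable, the sketch below invents minimal stand-ins for the university objects with `namedtuple`; those classes and the department names are hypothetical.

```python
from collections import namedtuple

# Hypothetical stand-ins for the objects on the slide.
University = namedtuple('University', 'colleges')
College = namedtuple('College', 'schools')
School = namedtuple('School', 'departments')
Department = namedtuple('Department', 'name')

def all_departments(university):
    # The triple loop lives here, once, instead of in every caller.
    for college in university.colleges:
        for school in college.schools:
            for department in school.departments:
                yield department

university = University(colleges=[
    College(schools=[
        School(departments=[Department('Physics'), Department('Chemistry')]),
    ]),
    College(schools=[
        School(departments=[Department('History')]),
    ]),
])

names = [department.name for department in all_departments(university)]
print(names)  # ['Physics', 'Chemistry', 'History']
```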

context managers

```python
r = get_resource()
r.get_ready()
try:
    use(r)
finally:
    r.close()
```

```python
with get_resource() as r:
    use(r)
```
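One way to make `get_resource()` usable in a `with` statement is `contextlib.contextmanager`, which moves the set-up and the `finally` clean-up into a generator. A sketch, assuming a hypothetical `Resource` class that merely records what happens to it:

```python
from contextlib import contextmanager

class Resource:
    """Hypothetical resource that logs each step of its life cycle."""
    def __init__(self):
        self.events = []

    def get_ready(self):
        self.events.append('ready')

    def close(self):
        self.events.append('closed')

@contextmanager
def get_resource():
    r = Resource()
    r.get_ready()
    try:
        yield r          # the body of the with-statement runs here
    finally:
        r.close()        # runs even if the body raises

with get_resource() as r:
    r.events.append('used')
print(r.events)  # ['ready', 'used', 'closed']
```

Every caller now gets the set-up and clean-up for free, the same way `all_departments()` gives every caller the loop.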

Two real-world examples

- Skyfield

- Luca

Skyfield

Object-based API backed by

dozens of pure functions that

implement the actual operations

The miserable thing about a

method is that it implicitly depends

upon the state of the whole object

The beautiful thing about a

function is that it explicitly depends

upon a specific list of arguments
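A sketch of that pattern, using a hypothetical `Position` class rather than Skyfield's actual API: the method is a thin wrapper, and the arithmetic lives in a function whose inputs are all explicit.

```python
from math import sqrt

def distance_between(xyz1, xyz2):
    """Pure function: depends only on the two tuples it is given."""
    return sqrt(sum((a - b) ** 2 for a, b in zip(xyz1, xyz2)))

class Position:
    """Hypothetical object API; the real work happens in the function."""
    def __init__(self, x, y, z):
        self.xyz = (x, y, z)

    def distance_to(self, other):
        return distance_between(self.xyz, other.xyz)

print(Position(0, 0, 0).distance_to(Position(3, 4, 0)))  # 5.0
```

`distance_between()` can be tested with plain tuples, without constructing an object first.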

```
>>> import this
The Zen of Python, by Tim Peters

Beautiful is better than ugly.
Explicit is better than implicit.
...
```

Luca

Temptation

Compute output fields as

the form is running, writing

their text into the PDF

Instead: phases

First read the entire tax form

Then do all the computations

Finally write to the PDF
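A sketch of the three phases on a made-up form. The field names and the arithmetic are hypothetical, not Luca's code or real tax rules, and `render()` returns text where Luca would fill in a PDF.

```python
def read_form(text):
    """Phase 1: read every 'name: amount' line into a plain dict."""
    fields = {}
    for line in text.splitlines():
        if ':' in line:
            name, value = line.split(':', 1)
            fields[name.strip()] = float(value)
    return fields

def compute(fields):
    """Phase 2: pure computation; returns a new dict, writes nothing."""
    result = dict(fields)
    result['total_income'] = fields.get('wages', 0.0) + fields.get('interest', 0.0)
    result['taxable'] = max(0.0, result['total_income'] - fields.get('deduction', 0.0))
    return result

def render(fields):
    """Phase 3: all output happens here (stands in for writing the PDF)."""
    return '\n'.join('%s: %.2f' % (name, value)
                     for name, value in sorted(fields.items()))

form = 'wages: 1000\ninterest: 50\ndeduction: 200\n'
print(render(compute(read_form(form))))
```

If `compute()` raises, the failing function's only input is the `fields` dictionary, which can be printed and replayed on its own.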

Luca’s approach of processing a

form in phases made an unexpected

improvement in my tracebacks!

A → B → C → D

Coupled procedures:

Very deep tracebacks

when D raises exceptions

```
   B
  ↗↙
A ←→ C
  ↘↖
   D
```

Decoupled functions:

Get shallow tracebacks,

only a few frames high
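The difference can be measured with the standard `traceback` module. In this sketch the step functions are hypothetical: `coupled_a` sets off a chain in which each procedure calls the next, while `glue` calls every step itself and passes plain values between them.

```python
import traceback

def step_d(x):
    raise ValueError('bad value: %r' % (x,))

# Coupled procedures: each one calls the next.
def coupled_a(x):
    return coupled_b(x + 1)

def coupled_b(x):
    return coupled_c(x + 1)

def coupled_c(x):
    return step_d(x + 1)

# Decoupled functions: glue calls each step and passes data along.
def add_one(x):
    return x + 1

def glue(x):
    x = add_one(x)
    x = add_one(x)
    x = add_one(x)
    return step_d(x)

def traceback_depth(func, arg):
    """Number of frames in the traceback when func(arg) fails."""
    try:
        func(arg)
    except ValueError as exc:
        return len(traceback.extract_tb(exc.__traceback__))
    raise AssertionError('expected a ValueError')

print(traceback_depth(coupled_a, 0))  # 5
print(traceback_depth(glue, 0))       # 3
```

Both counts include the `traceback_depth` frame itself. The earlier steps in `glue` have already returned by the time `step_d` fails, so they do not appear at all.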

The pith

Old

To get rid of I/O,

make it subordinate

New

To really get rid of someone,

make them a manager!

Let’s return to Wheeler

In 1952 he gave us the “sub-routine”

We have yet to realize

its full power and promise!

“When a programme has been

made from a set of sub-routines the

breakdown of the code is more complete

than it otherwise would be.

“This allows the coder

to concentrate on one section

of the program at a time without

the overall detailed programme

continually intruding.

“Thus the sub-routines

can be more easily coded and

be tested in isolation from the

rest of the programme.

“When the entire programme

has to be tested it is with the

foreknowledge that the incidence

of mistakes in the subroutine is —

zero

“(or at least one order

of magnitude below that of the

untested portions of the programme!)”

☘

@brandon_rhodes